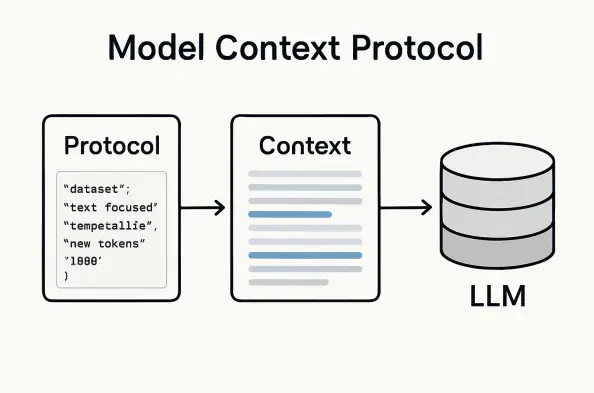

Imagine a world where connecting artificial intelligence assistants to vast arrays of external data and tools is as easy as plugging in a USB drive, yet the very simplicity that makes this possible also opens the door to a labyrinth of risks and roadblocks. This is the reality of Model Context Protocol (MCP) servers, which are celebrated for how quickly and easily they link large language models (LLMs) to the resources those models need to do useful work. At first blush, MCP appears to be a silver bullet for enterprises eager to harness AI’s potential. Beneath the surface, however, lies a daunting truth: while setting up these servers is straightforward, keeping them secure and scaling them to meet real-world demands is anything but simple. Drawing on the insights of engineers, CTOs, and security researchers, this exploration uncovers the hidden complexities of MCP deployment, tracing a path from the protocol’s initial allure to the gritty challenges that emerge when theory meets practice in high-stakes environments.

Unpacking the Appeal and Pitfalls of MCP Design

The Lure of Quick Setup

The brilliance of MCP lies in its accessibility, a feature that has captivated developers looking to integrate AI agents with external systems in record time. Designed with simplicity at its core, the protocol allows even those with basic technical skills to connect LLMs to diverse data sources or tools without wading through endless lines of complex code. Industry voices, such as Mohith Shrivastava from Salesforce, often point to MCP’s value in rapid prototyping and early-stage projects. In these settings, where the goal is to test concepts rather than build fortified systems, MCP shines by cutting development timelines dramatically. This ease of use has made it a go-to for innovators eager to experiment with AI-driven solutions, offering a low barrier to entry that fuels creativity and shortens the path from idea to working prototype.

Moreover, the appeal of MCP extends beyond speed; it democratizes access to advanced AI integration for smaller teams and organizations without deep resources. Unlike more intricate frameworks that demand extensive expertise, MCP’s plug-and-play nature means a functional server can be running in a matter of hours, not days. This efficiency is particularly valuable in controlled, small-scale environments where the focus is on proof of concept rather than long-term reliability. Experts note that for startups and research labs, this quick setup can be a game-changer, letting them pivot rapidly based on initial findings. Yet, as enticing as this simplicity sounds, it is only the first chapter of a much larger story, and the plot thickens considerably once the stage shifts to real-world use.

The Reality Behind the Facade

However, the honeymoon phase with MCP often ends abruptly once the move from sandbox to production begins. What seemed like a seamless solution in testing reveals itself as a fragile structure under the weight of live demands. Anand Chandrasekaran from Arya Health underscores this contrast: a server may come together effortlessly, but keeping it running under the relentless pace and unpredictability of a live environment is far harder. Erratic traffic spikes and dynamic user interactions expose flaws that were invisible in controlled settings, leaving developers scrambling to adapt a system that was never built for such chaos.

Furthermore, the very design that makes MCP so approachable also sows the seeds of trouble in execution. Without built-in mechanisms for the demands of enterprise-grade operations, the protocol often buckles under real-time pressure. Production environments demand robustness, which MCP lacks in its raw form. Reports from the field describe frequent performance breakdowns when servers encounter unexpected loads or ambiguous requests from AI agents. This gap between initial promise and operational grit is the crux of why MCP, despite its allure, remains a risky proposition for teams that fail to anticipate the hurdles beyond setup.

Navigating the Security Maze of MCP Servers

Exposed Weaknesses in a Fast-Track System

When it comes to security, MCP’s greatest strength, its rapid connectivity, quickly becomes a glaring vulnerability that enterprises can ill afford to ignore. Because the protocol emphasizes speed over safeguards, it lacks the built-in protections needed for safe operation in sensitive contexts. Nik Kale from Cisco Systems has voiced serious concerns about the unpredictability of AI agents that access internal systems without clear boundaries, a scenario that invites exploitation by malicious actors. Without robust permission controls or data minimization, an MCP server can inadvertently become an open door to critical infrastructure, putting entire networks at risk within moments.

Compounding this issue is the absence of governance mechanisms in MCP’s core architecture. Unlike more mature protocols that treat compliance and security as foundational elements, MCP leaves them as afterthoughts for users to patch after deployment. This gap is particularly troubling in industries where data breaches carry severe legal and financial consequences. Experts warn that the faster a server is rushed into production, the sooner its weaknesses tend to be exploited, a sobering reminder that simplicity can come at a steep cost. As enterprises adopt MCP for its ease, they must grapple with the reality that unchecked access by AI agents could lead to catastrophic breaches if it is not addressed proactively.

Crafting Defenses for a Vulnerable Protocol

To counter these inherent risks, industry leaders advocate adding external security layers to fortify MCP servers before they go live. Proposals include strict identity controls such as On-Behalf-Of (OBO) token patterns, in which the agent acts with a token tied to the requesting user, so that every action it takes is bounded by that user’s permissions instead of running unchecked. Data minimization, which limits the information agents can access, has also gained traction as a way to reduce exposure. These measures are not native to MCP, but they are seen as vital stopgaps against misuse and as a safeguard for sensitive systems against unintended leaks or breaches.
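To make the OBO and data-minimization ideas concrete, here is a minimal Python sketch under stated assumptions. It does not use the MCP SDK or a real identity provider. `UserToken`, `AGENT_ALLOWED_SCOPES`, `FIELD_ALLOWLIST`, `lookup_customer`, and the scope strings are all hypothetical. The sketch models only the policy a token exchange would feed into: the agent's effective permissions are the intersection of the user's scopes and the agent's allowed scopes, and each response is trimmed to the fields the tool may return.

```python
from dataclasses import dataclass

# Hypothetical: the only scopes this agent may ever request.
AGENT_ALLOWED_SCOPES = frozenset({"crm:read", "tickets:read", "tickets:write"})

# Hypothetical data-minimization policy: the fields each tool may return.
FIELD_ALLOWLIST = {
    "lookup_customer": {"customer_id", "name", "plan"},
}


@dataclass(frozen=True)
class UserToken:
    """Stand-in for a token issued on behalf of the signed-in user.

    A real OBO flow would obtain this from an identity provider;
    here it is just a subject plus a set of scopes.
    """
    subject: str
    scopes: frozenset


def effective_scopes(user: UserToken) -> frozenset:
    # The agent never holds more than the user it acts for,
    # nor more than the agent itself is allowed to request.
    return user.scopes & AGENT_ALLOWED_SCOPES


def authorize(user: UserToken, required_scope: str) -> None:
    if required_scope not in effective_scopes(user):
        raise PermissionError(f"{user.subject} lacks {required_scope!r} for this agent")


def minimize(tool: str, record: dict) -> dict:
    # Keep only the fields this tool is explicitly allowed to expose.
    allowed = FIELD_ALLOWLIST.get(tool, set())
    return {k: v for k, v in record.items() if k in allowed}


def lookup_customer(user: UserToken, record: dict) -> dict:
    # Hypothetical tool: check permission first, then trim the response.
    authorize(user, "crm:read")
    return minimize("lookup_customer", record)


if __name__ == "__main__":
    alice = UserToken("alice", frozenset({"crm:read", "billing:admin"}))
    full = {"customer_id": 7, "name": "Acme", "plan": "pro", "ssn": "xxx-xx-1234"}
    print(lookup_customer(alice, full))  # {'customer_id': 7, 'name': 'Acme', 'plan': 'pro'}
```

In this example `billing:admin` never reaches the agent even though Alice holds it, and the `ssn` field is stripped before the model sees the record.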

Equally critical is the push for governance frameworks that can enforce compliance in regulated sectors. Without such structures, MCP deployments risk falling afoul of stringent data protection laws, a concern that looms large for industries like healthcare and finance. Solutions like centralized agent gateways are often floated as a means to impose order, acting as a checkpoint to monitor and regulate interactions between AI agents and external tools. Though these additions enhance security, they also introduce complexity, requiring enterprises to balance the need for protection with the desire for MCP’s original simplicity. This delicate dance underscores the broader challenge of adapting a lightweight protocol to the heavyweight demands of secure operation.
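A centralized agent gateway can be pictured as a single choke point that every tool call passes through. The sketch below is illustrative only. `AgentGateway`, the per-agent allowlist, and the `AuditEntry` record are hypothetical and do not correspond to MCP or to any particular gateway product. It shows the two roles described above: enforcing policy on each call and keeping an audit trail that includes denied attempts.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class AuditEntry:
    agent_id: str
    tool: str
    allowed: bool
    timestamp: float


@dataclass
class AgentGateway:
    # Hypothetical registry of tool implementations reachable through the gateway.
    tools: dict[str, Callable[..., Any]]
    # Hypothetical policy: the set of tools each agent may call.
    allowlist: dict[str, set[str]]
    audit_log: list[AuditEntry] = field(default_factory=list)

    def call(self, agent_id: str, tool: str, **kwargs: Any) -> Any:
        allowed = tool in self.tools and tool in self.allowlist.get(agent_id, set())
        # Record every attempt, including denied ones, before acting on it.
        self.audit_log.append(AuditEntry(agent_id, tool, allowed, time.time()))
        if not allowed:
            raise PermissionError(f"agent {agent_id!r} may not call {tool!r}")
        return self.tools[tool](**kwargs)


if __name__ == "__main__":
    gw = AgentGateway(
        tools={"get_weather": lambda city: f"sunny in {city}", "drop_table": lambda: "dropped"},
        allowlist={"support-bot": {"get_weather"}},
    )
    print(gw.call("support-bot", "get_weather", city="Oslo"))  # sunny in Oslo
    try:
        gw.call("support-bot", "drop_table")
    except PermissionError as err:
        print(err)  # agent 'support-bot' may not call 'drop_table'
```

In practice such a gateway would likely run as a separate service in front of the MCP servers. That extra moving part is exactly the added complexity the paragraph warns about.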

Confronting Scalability and Performance Barriers

Stumbling Blocks in Expanding Reach

As enterprises look to scale their AI operations, MCP’s limitations become painfully apparent, revealing a protocol unprepared for the rigors of large, distributed networks. Initially crafted for quick, point-to-point connections, MCP struggles to manage the intricate web of interactions that define multi-agent systems at scale. James Urquhart from Kamiwaza AI points to the bottlenecks that inevitably arise when numerous agents compete for the same resources, producing traffic jams and inconsistent performance. This contention disrupts workflows, turning what was once a smooth connection into a drag on productivity.
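One common mitigation for this kind of contention, offered here as an illustration rather than something the experts quoted prescribe, is to cap how many calls may hit a shared backend at once and make the rest wait. This sketch uses `asyncio.Semaphore` from Python's standard library. `MAX_IN_FLIGHT`, `slow_tool`, and the simulated 10 ms of work are hypothetical stand-ins.

```python
import asyncio

MAX_IN_FLIGHT = 3  # hypothetical capacity of the shared backend


async def slow_tool(i: int, tracker: dict) -> int:
    # Track how many calls are running at the same moment.
    tracker["active"] += 1
    tracker["peak"] = max(tracker["peak"], tracker["active"])
    await asyncio.sleep(0.01)  # simulated backend work
    tracker["active"] -= 1
    return i * 2


async def limited_call(sem: asyncio.Semaphore, i: int, tracker: dict) -> int:
    # Callers beyond the cap wait here instead of piling onto the backend.
    async with sem:
        return await slow_tool(i, tracker)


async def run_agents(n_calls: int) -> tuple[list[int], int]:
    sem = asyncio.Semaphore(MAX_IN_FLIGHT)
    tracker = {"active": 0, "peak": 0}
    results = await asyncio.gather(*(limited_call(sem, i, tracker) for i in range(n_calls)))
    return list(results), tracker["peak"]


if __name__ == "__main__":
    results, peak = asyncio.run(run_agents(10))
    print(results)
    print(peak)  # never exceeds MAX_IN_FLIGHT
```

A cap like this trades latency for stability: excess callers queue up rather than degrading the backend for everyone. In a real deployment the limit would more likely be enforced at a gateway or load balancer than inside each agent.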

Beyond mere congestion, MCP’s lack of inherent coordination mechanisms exacerbates these scaling woes. The protocol assumes instantaneous responses between agents, an expectation that grows less realistic as systems expand. Without built-in prioritization or queuing, enterprises face unpredictable behavior in which critical tasks may be delayed by less urgent ones. This shortfall is a significant barrier for organizations that want to deploy MCP across broader operations, where reliability under pressure is essential rather than merely preferred. The result is a growing realization that scaling MCP requires more than additional servers; it demands a fundamental rethink of how interactions are managed.
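The missing prioritization can be approximated by placing a priority queue in front of the tool layer, so urgent requests are dispatched before routine ones. The sketch below uses Python's `heapq`. `ToolRequest`, `PriorityDispatcher`, and the convention that a lower number means more urgent are hypothetical. A monotonically increasing sequence number breaks ties, so requests of equal priority are served in arrival order.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Optional


@dataclass(order=True)
class ToolRequest:
    priority: int  # lower number = more urgent (hypothetical convention)
    seq: int       # arrival order; breaks ties so equal priorities stay FIFO
    name: str = field(compare=False)


class PriorityDispatcher:
    """Hypothetical queue placed in front of the tool layer."""

    def __init__(self) -> None:
        self._heap: list[ToolRequest] = []
        self._counter = itertools.count()

    def submit(self, name: str, priority: int) -> None:
        heapq.heappush(self._heap, ToolRequest(priority, next(self._counter), name))

    def next_request(self) -> Optional[str]:
        # Pop the most urgent request, or return None when the queue is empty.
        if not self._heap:
            return None
        return heapq.heappop(self._heap).name


if __name__ == "__main__":
    d = PriorityDispatcher()
    d.submit("nightly_report", priority=5)
    d.submit("fraud_check", priority=0)
    d.submit("refresh_cache", priority=5)
    print([d.next_request() for _ in range(3)])
    # ['fraud_check', 'nightly_report', 'refresh_cache']
```

A production scheduler would also need to guard against starvation, for example by gradually raising the priority of requests that have waited too long.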

Seeking Solutions Through Structured Management

In response to these scalability challenges, experts propose the adoption of orchestration frameworks to impose order on MCP’s chaotic expansion. Scheduling mechanisms, for instance, can help prioritize tasks and allocate resources more effectively, preventing the gridlock that plagues unguided multi-agent systems. Similarly, organizing toolchains into job-specific “topics” allows for modular architectures that streamline interactions by focusing agents on defined roles. These approaches aim to transform MCP from a free-for-all into a coordinated ecosystem capable of handling enterprise demands without sacrificing performance.
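Organizing toolchains into job-specific topics can be as simple as a registry that exposes to each agent only the tools for the topics it is assigned. Everything in this sketch is hypothetical. The topic names, tool names, agent IDs, and the `tools_for_agent` helper are illustrative and are not an MCP feature.

```python
# Hypothetical topic registry: each job-specific topic lists the tools it needs.
TOPICS: dict[str, list[str]] = {
    "billing": ["get_invoice", "issue_refund"],
    "support": ["search_kb", "create_ticket"],
    "reporting": ["run_query", "export_csv"],
}

# Hypothetical assignment of agents to topics.
AGENT_TOPICS: dict[str, list[str]] = {
    "refund-agent": ["billing"],
    "helpdesk-agent": ["support", "billing"],
}


def tools_for_agent(agent_id: str) -> list[str]:
    """Return the de-duplicated tools an agent should see, in topic order.

    Unknown agents or topics yield no tools (deny by default).
    """
    visible: list[str] = []
    for topic in AGENT_TOPICS.get(agent_id, []):
        for tool in TOPICS.get(topic, []):
            if tool not in visible:
                visible.append(tool)
    return visible


if __name__ == "__main__":
    print(tools_for_agent("helpdesk-agent"))
    # ['search_kb', 'create_ticket', 'get_invoice', 'issue_refund']
```

Defaulting to an empty list for unknown agents keeps the registry fail-closed: an agent that has not been assigned a topic sees no tools at all.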

Yet, implementing such solutions is not without its trade-offs, as each layer of orchestration adds operational overhead to a protocol once prized for its leanness. Enterprises must invest in additional infrastructure to manage these frameworks, a step that can strain budgets and complicate maintenance. Moreover, the process of curating tool access and refining agent scopes requires meticulous planning to avoid unintended bottlenecks or inefficiencies. While these strategies offer a path forward, they highlight a broader tension: the very fixes needed to make MCP scalable also erode some of the simplicity that made it attractive in the first place. Balancing these competing priorities remains a key puzzle for those committed to leveraging MCP at scale.

Building a Robust Future for MCP Implementations

Bridging the Testing-to-Production Divide

One of the most persistent challenges with MCP servers is the jarring disconnect between their performance in testing and their behavior under live conditions. In controlled environments, where variables are limited and traffic is predictable, MCP often performs flawlessly, giving developers a false sense of confidence. However, as Nuha Hashem from Cozmo AI notes, this reliability evaporates when servers face the unpredictable nature of real-world usage. Poorly scoped requests and vague access rules lead to erratic responses from AI agents, creating headaches in fields where precision is paramount, such as law and medicine.

This testing-to-production gap demands a reevaluation of how MCP servers are prepared for deployment. Tightening the definition of agent tasks and establishing clearer boundaries on data access can mitigate the risk of unfocused outputs or unintended actions. Furthermore, simulating real-world stressors during testing phases can better expose potential weaknesses before they manifest in live settings. Addressing this divide is crucial for enterprises that rely on consistent AI-driven decision-making, ensuring that MCP’s initial promise doesn’t falter when it matters most. The lesson here is clear: thorough preparation beyond the lab is not optional but essential for success.
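A first step toward simulating real-world stressors is a small harness that fires a burst of concurrent, partly out-of-scope requests at a handler and tallies the outcomes. In this sketch, `handle_request` is a hypothetical stand-in for an MCP tool handler with an explicit data-access boundary (`ALLOWED_TABLES`). The burst size and the share of bad requests are arbitrary parameters, not recommended values.

```python
import asyncio
import random
import time

ALLOWED_TABLES = {"orders", "customers"}  # hypothetical data-access boundary


async def handle_request(req: dict) -> str:
    # Reject poorly scoped requests instead of guessing what the agent meant.
    table = req.get("table")
    if table not in ALLOWED_TABLES:
        raise ValueError(f"out-of-scope table: {table!r}")
    await asyncio.sleep(random.uniform(0.001, 0.005))  # simulated work
    return f"rows from {table}"


async def burst_test(n: int, bad_ratio: float, seed: int = 0) -> dict:
    rng = random.Random(seed)  # fixed seed -> reproducible request mix
    reqs = [
        {"table": "payroll"} if rng.random() < bad_ratio
        else {"table": rng.choice(sorted(ALLOWED_TABLES))}
        for _ in range(n)
    ]
    start = time.perf_counter()
    # Fire all requests concurrently; collect exceptions instead of aborting.
    outcomes = await asyncio.gather(*(handle_request(r) for r in reqs), return_exceptions=True)
    elapsed = time.perf_counter() - start
    rejected = sum(isinstance(o, ValueError) for o in outcomes)
    return {"total": n, "ok": n - rejected, "rejected": rejected, "seconds": elapsed}


if __name__ == "__main__":
    print(asyncio.run(burst_test(n=500, bad_ratio=0.1)))
```

Fixing the seed makes the request mix identical between runs, so a change in how the handler treats out-of-scope requests shows up as a changed count rather than as noise.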

Fortifying MCP with Pragmatic Guardrails

Taken together, these experiences reflect a shared understanding among experts: the protocol, while groundbreaking, needs significant bolstering to meet enterprise standards. The consensus is unmistakable. Without deliberate enhancements, MCP’s simplicity could become its Achilles’ heel. Centralized agent gateways are championed as vital tools for imposing control, offering a structured entry point for monitoring interactions. Meanwhile, granular controls such as limited tool access for LLMs and strict identity policies have proven effective in curbing misuse and protecting sensitive data.

The path forward rests on actionable steps for IT leaders and CIOs. Investing in robust orchestration layers to manage scale, prioritizing security through external frameworks, and aligning MCP deployments with compliance mandates stand out as non-negotiable priorities. Fostering a culture of cautious adoption, guided by best practices and ongoing vigilance against emerging threats such as malicious servers, offers a blueprint for harnessing MCP’s potential. These measures, though challenging, pave the way for a future in which the protocol can evolve from a promising concept into a reliable cornerstone of AI integration.