Beyond the boardroom strategies and officially sanctioned platforms, a vast and unmonitored digital workforce of unapproved artificial intelligence models is quietly reshaping workflows and introducing unprecedented risk. Just as quickly as enterprises are racing to operationalize AI, this “shadow AI” is racing to outpace governance. The issue is no longer about a few rogue chatbots but about entire operational workflows being silently powered by unvetted models, vendor APIs, and autonomous agents that have never been through compliance checks. This unseen activity creates significant vulnerabilities, from sensitive data exposure and algorithmic bias to sudden reputational harm when a clandestine experiment goes public before anyone in leadership even knows it exists. The solution, however, is not to discourage or slow the adoption of AI. Instead, the focus must shift toward making responsible, approved practices as easy and automatic as the shadow alternatives that employees turn to when the official path feels too slow and cumbersome.

When the Unseen Becomes the Unsafe

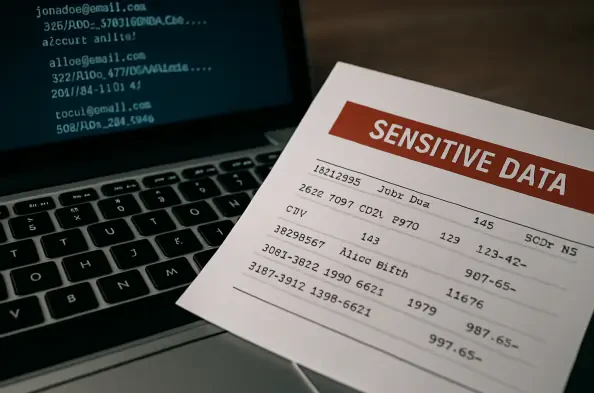

The proliferation of unvetted AI within an organization represents a fundamental disconnect between the drive for innovation and the mechanisms in place to manage it. This is not merely about employees using public generative AI tools for simple tasks; it extends to the integration of complex, unapproved APIs into critical business software and the deployment of autonomous agents to handle sensitive customer interactions. These tools operate outside the purview of IT and security teams, creating a parallel digital infrastructure that is invisible to standard monitoring and control systems. Consequently, the organization loses visibility into how its data is being used, where it is being sent, and what decisions are being made on its behalf.

The risks stemming from this lack of oversight are both immediate and profound. Sensitive company information, including customer records, financial data, and proprietary trade secrets, can be inadvertently fed into third-party models with uncertain data retention and privacy policies, leading to irreversible exposure. Furthermore, unvetted algorithms, particularly those used in areas like human resources or marketing, can perpetuate and amplify hidden biases, resulting in discriminatory outcomes that damage the company’s reputation and invite legal challenges. For leadership, this presents a critical crossroads: how to harness the immense productivity gains promised by AI without sacrificing the essential principles of security, ethics, and corporate responsibility.

The Numbers Behind the Narrative

Recent data illuminates the staggering scale of this challenge, moving it from anecdotal concern to a documented, widespread phenomenon. A comprehensive survey from Cybernews revealed that nearly 60% of employees regularly use unapproved AI tools at work. While many of these individuals acknowledge the potential risks involved, the immediate benefits of efficiency and problem-solving often outweigh abstract policy compliance. This trend persists even in organizations that provide official AI solutions, as nearly half of the respondents reported that their company-approved tools failed to fully meet their on-the-job needs, pushing them toward more capable, albeit unsanctioned, alternatives.

The security implications of this behavior are stark and measurable. Shadow AI incidents are now estimated to account for 20% of all data breaches, transforming what might seem like minor policy infractions into major enterprise risk vectors. Compounding this, 27% of organizations report that over a third of the data processed by their various AI systems contains sensitive or private information. This brings to light a manager’s paradox: the same Cybernews survey found that most direct managers are either aware of or tacitly approve the use of shadow AI by their teams. This indicates a systemic issue where the pressure to deliver results forces a pragmatic, yet risky, circumvention of official policy, suggesting that the governance model itself—not the employees—is what needs correction.

A Blueprint for AI-Powered Governance

To effectively combat the rise of shadow AI, the core principle must be to make the approved path as fast and frictionless as the unsanctioned alternative. The only realistic way forward is to build a governance framework that is not a bureaucratic hurdle but a streamlined enabler. This involves using the very technology being governed to automate and accelerate the approval process itself. A modern governance model strikes a crucial balance: maintaining enough rigor to mitigate key risks while being seamless enough to encourage broad engagement from all business units.

This begins with automating the first line of defense. By deploying an AI-driven assessment tool, organizations can pre-screen new projects and vendor tools with remarkable speed. Teams can simply submit a proposal, a document, or even just the URL of a third-party service, and the system can automatically execute a risk-analysis workflow. This workflow flags common risk categories—such as data sensitivity, model bias, security posture, and duplication of existing efforts—and assigns a preliminary risk ranking. This allows a human governance committee to focus its attention on strategic value and nuanced judgment calls rather than getting bogged down in repetitive, manual assessments.
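As a rough illustration of that pre-screening step, the sketch below flags the four risk categories named above and derives a preliminary ranking. Everything in it is an assumption made for illustration: the keyword lists, the `pre_screen` function, the `Assessment` type, and the rule that two or more flags mean "high" are hypothetical, and a production system would more likely use a trained classifier or a language model than substring matching.

```python
from dataclasses import dataclass, field

# Hypothetical keyword lists for each risk category named in the text.
# Substring matching stands in for whatever model a real tool would use.
RISK_KEYWORDS = {
    "data_sensitivity": ["customer record", "financial", "trade secret", "personal data"],
    "model_bias": ["hiring", "recruiting", "credit scoring", "performance review"],
    "security_posture": ["third-party api", "external vendor", "browser extension"],
    "duplication": ["chatbot", "summarizer", "transcription"],
}


@dataclass
class Assessment:
    flags: list = field(default_factory=list)  # risk categories that matched
    ranking: str = "low"                       # preliminary ranking only


def pre_screen(submission: str) -> Assessment:
    """Flag risk categories in a free-text submission and rank it.

    Assumed ranking rule: no flags is "low", one flag is "medium",
    two or more flags is "high".
    """
    text = submission.lower()
    flags = [
        category
        for category, keywords in RISK_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    ]
    if len(flags) >= 2:
        ranking = "high"
    elif len(flags) == 1:
        ranking = "medium"
    else:
        ranking = "low"
    return Assessment(flags=flags, ranking=ranking)


if __name__ == "__main__":
    result = pre_screen(
        "Agent that screens hiring candidates via a third-party API "
        "using customer records"
    )
    print(result.flags, result.ranking)
```

The output is deliberately a preliminary ranking rather than a decision: it only orders the queue so that the human committee sees the riskiest submissions first.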

Lowering the barrier to entry for business units is equally critical. The submission process should be intuitive, allowing innovators to upload whatever artifact they have on hand, be it an email draft, a presentation, or a link to a blog post, without needing to draft a massive formal project charter for an initial review. To complement this, asset discovery tools and real-time monitoring can provide comprehensive visibility into all AI usage across the enterprise, creating an accurate inventory that turns shadow systems into known assets. Finally, embedding a risk-based approval model ensures that not every project faces the same level of scrutiny. A low-risk internal HR assistant can be fast-tracked through an automated approval lane, while a high-risk autonomous agent for hiring decisions is routed for deep, expert human review.
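The risk-based approval model can be pictured as a small routing function. The sketch below is a minimal illustration under stated assumptions: the `Lane` names, the `route` function, the "medium" tier, and the choice to send unrecognized rankings to expert review are all hypothetical and not drawn from any specific governance product.

```python
from enum import Enum


class Lane(Enum):
    """Hypothetical approval lanes, from lightest to heaviest scrutiny."""
    AUTO_APPROVE = "automated fast-track approval"
    COMMITTEE = "standard governance committee review"
    EXPERT_REVIEW = "deep expert human review"


def route(ranking: str) -> Lane:
    """Map a preliminary risk ranking to an approval lane.

    Assumption: any ranking the function does not recognize is treated
    as high risk, so a malformed submission can never be fast-tracked.
    """
    lanes = {
        "low": Lane.AUTO_APPROVE,
        "medium": Lane.COMMITTEE,
        "high": Lane.EXPERT_REVIEW,
    }
    return lanes.get(ranking.strip().lower(), Lane.EXPERT_REVIEW)


if __name__ == "__main__":
    # A low-risk internal HR assistant versus a high-risk hiring agent.
    print(route("low").value)
    print(route("high").value)
```

Defaulting to the strictest lane keeps the fast track an explicit exception: a project has to earn a "low" ranking to skip human review.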

Transforming Governance from Gatekeeper to Enabler

Ultimately, a successful AI strategy requires a profound cultural shift in how governance is perceived. It must cease to be seen as a restrictive gatekeeper and instead be repositioned as a strategic enabler that provides safe, clearly marked lanes for rapid innovation. When governance processes are overly restrictive, slow, or opaque, they have the unintended consequence of pushing employees toward shadow solutions in the name of productivity, thereby exacerbating the very risks the policies were designed to prevent.

A proactive approach is essential. This involves not only sanctioning a library of approved, vetted AI tools but also establishing a transparent and efficient process for evaluating new ones. When an employee’s request is met with a clear, fast, and supportive review, the incentive to go rogue diminishes significantly. If a tool is denied, the reasons should be communicated transparently, and alternative, safer solutions should be explored collaboratively. When the official path becomes the path of least resistance, it naturally becomes the preferred choice.

Without a centralized and streamlined governance model, AI tools emerge in the shadows, leading to heightened risk, compliance blind spots, and missed opportunities for responsible scaling. The path forward requires not a heavy-handed crackdown on employees but easier, faster, and more comprehensive methods to assess and manage risk. The most effective solution is to use AI itself to create a governance framework that is intelligent, responsive, and, above all, an accelerator of innovation rather than an obstacle to it.