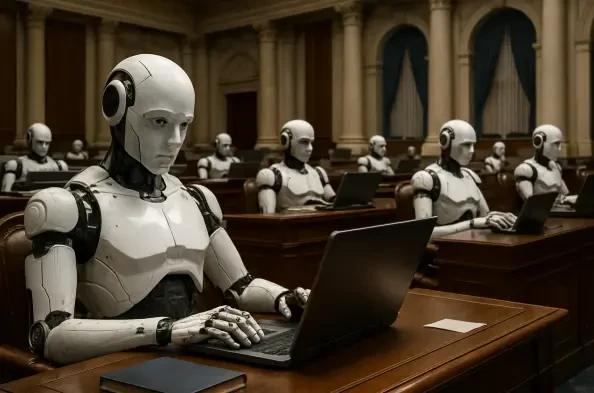

The rapid expansion of agentic artificial intelligence has created a critical governance deficit that is silently growing within enterprises, where autonomous systems are being deployed far faster than the frameworks designed to control them. As organizations rush to integrate these powerful new capabilities, the essential pillars of lifecycle management, transparent observability, and robust security are often treated as afterthoughts, relegated to a “to-do” list for a future that may never come. This reactive approach is more than a simple technical oversight; it is a disaster in the making. The autonomous and continuously evolving nature of AI agents introduces significant and novel risks: uncontrolled proliferation, technological obsolescence, hidden security vulnerabilities, and rapidly escalating costs. Left unchecked, this trend leads to an accumulation of what can be described as “agentic tech debt.” A proactive, structured, and strategic approach to AI agent management is therefore no longer an optional best practice but an essential component of corporate survival.

The Widening Gap Between Adoption and Control

The gap between the speed of agentic AI adoption and the implementation of corresponding governance frameworks is growing at an alarming rate, creating a high-stakes environment where innovation far outpaces oversight. An MIT report illustrates this trend, revealing that 35% of companies have already adopted agentic AI, with an additional 44% planning to do so imminently. While technology experts and strategic advisors strongly recommend building a centralized governance infrastructure before a full-scale deployment, the reality on the ground is quite different. Driven by a perceived “race for survival” in a competitive market, most organizations are prioritizing speed and immediate business outcomes over the methodical, structured oversight required to manage such a transformative technology. This frantic pace causes governance to lag dangerously behind the very systems it is meant to control, leaving businesses increasingly exposed to risks they do not fully comprehend.

This reactive posture creates significant and often invisible blind spots, even within companies that have some form of governance in place. Existing systems are frequently too narrow in scope, focusing exclusively on large, formally sanctioned, IT-driven projects while missing the many smaller or department-led initiatives that bubble up organically across the enterprise. Furthermore, the attention of these limited governance structures tends to be fixated on immediate, operational concerns such as the accuracy, security, and compliance of currently active agents. This narrow focus neglects the back end of the agent lifecycle, such as establishing clear criteria for identifying agent obsolescence or creating formal processes for decommissioning tools that are no longer needed or effective. The excitement of building the next new thing consistently overshadows the less glamorous but essential work of maintaining and retiring the old, resulting in a dangerous lack of rigor in long-term technology management.

The Rise of Unseen Risks and Shadow AI

The governance challenge is massively compounded by the widespread and often untracked proliferation of AI agents from an increasingly diverse range of sources, turning the corporate technology stack into a complex and opaque ecosystem. Agentic AI is no longer the exclusive domain of data science or IT departments; instead, it is becoming a pervasive, underlying layer of enterprise technology. These agents are emerging from multiple vectors simultaneously, including enterprise software vendors, who are embedding AI assistants into most applications, and SaaS platforms like Salesforce, which are integrating agentic capabilities directly into their core offerings. Moreover, do-it-yourself automation tools like Zapier and even standard web browsers are making agent creation readily accessible to all users. A significant driver of this uncontrolled spread is the empowerment of non-technical “citizen developers” to create their own agents, a practice that an EY survey reveals is permitted by a staggering two-thirds of companies.

This decentralization of development is fueling the dangerous phenomenon of “shadow AI,” where employees use unsanctioned tools to solve immediate business problems. An IBM survey found that while a remarkable 80% of office workers use AI, only 22% exclusively use employer-sanctioned tools, creating a vast and unmonitored “shadow AI agent gap.” This is not merely anecdotal; network traffic analysis from security firms provides concrete evidence of this trend, showing users in two-thirds of organizations downloading resources from open AI-sharing sites and making direct API calls to large language models from providers like OpenAI and Anthropic. This unsanctioned usage, often double what companies self-report, creates an invisible and expanding risk landscape. These shadow agents, however well-intentioned, still require access to sensitive company data and systems, thereby creating new, unmonitored attack surfaces for malicious actors to exploit.

The Perils of an Unmanaged Agent Lifecycle

Unlike traditional software, AI agents possess a dynamic and potentially problematic lifecycle that introduces unprecedented management challenges. They are not static tools but complex systems that can continuously learn, evolve their behavior, and progressively gain access to more systems over time. This new reality demands a complete paradigm shift in lifecycle management, one for which most organizations are dangerously unprepared. A core problem is the inherent lack of built-in expiration; agents are typically deployed without clear criteria for retirement, and SaaS providers have a direct commercial incentive to keep their agents active indefinitely. This operational reality fosters a “set it and forget it” mentality, where autonomous systems are unleashed into the corporate environment with little to no long-term planning for their maintenance, evolution, or eventual decommissioning.

In the absence of proactive oversight, the default management strategy inevitably becomes a reactive one, often characterized as “management by disaster.” Under this model, agents are only reviewed or retired after something has gone visibly and often publicly wrong. This leaves countless underperforming, irrelevant, or obsolete agents operating entirely under the radar. These “zombie agents” can manifest in two primary ways: either as failed pilot projects that were never formally shut down and continue to consume resources, or as once-active agents whose performance has quietly degraded over time due to shifts in data, business processes, or the external environment. As these forgotten agents continue to operate and make decisions based on outdated models or information, they accumulate significant organizational debt, posing a latent threat that is analogous to running critical systems on old, unmaintained code.

A Proactive Blueprint for Effective Governance

In stark contrast to the widespread lack of governance, highly regulated industries offer a potential blueprint for success, where the high stakes have necessitated a more disciplined and forward-thinking approach. The financial services sector, for instance, provides a compelling case study in proactive management. One strategy, championed by industry leaders, is founded on the core principle that “an agent is an application.” By treating AI agents with the same rigor as traditional software, companies can extend their mature Application Lifecycle Management (ALM) processes to cover these new systems. This methodology ensures that every AI use case is rigorously vetted from its inception for regulatory compliance, ethical considerations, and performance standards that must be maintained throughout its entire lifecycle, from development to retirement.

This disciplined model included several key components for maintaining comprehensive control and visibility. A centralized “AI agent marketplace” was created to serve as a complete and authoritative inventory, providing clear insight into what agents existed, how they were being used, and which versions were currently active across the organization. Crucially, this repository also included the technical capability to gracefully sunset agents that were no longer needed or had become obsolete. Furthermore, all models were subjected to continuous testing and monitoring by specialized orchestrator agents, with mandatory human validation points built into their workflows. This multi-layered oversight ensured that agents stayed within their designated tasks and prevented runaway costs from useless or rogue operations, transforming a potential source of chaos into a well-managed corporate asset.
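The inventory-plus-sunset idea described above can be illustrated with a small sketch. This is not the implementation of any particular company or product; the names `AgentRegistry`, `AgentRecord`, `register`, `active`, and `sunset` are hypothetical, and a real marketplace would also need persistence, access control, and hooks to actually revoke an agent's credentials.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Dict, List, Optional


class Status(Enum):
    ACTIVE = "active"
    RETIRED = "retired"


@dataclass
class AgentRecord:
    """One inventory entry: what the agent is, who owns it, which version runs."""
    name: str
    owner: str
    version: str
    status: Status = Status.ACTIVE
    retired_on: Optional[date] = None


class AgentRegistry:
    """Hypothetical in-memory inventory of agents (illustrative only)."""

    def __init__(self) -> None:
        self._agents: Dict[str, AgentRecord] = {}

    def register(self, name: str, owner: str, version: str) -> AgentRecord:
        # Refuse duplicates so the inventory stays authoritative.
        if name in self._agents:
            raise ValueError(f"agent already registered: {name}")
        record = AgentRecord(name=name, owner=owner, version=version)
        self._agents[name] = record
        return record

    def active(self) -> List[str]:
        # Names of agents that have not been retired, in sorted order.
        return sorted(
            name
            for name, record in self._agents.items()
            if record.status is Status.ACTIVE
        )

    def sunset(self, name: str, on: date) -> AgentRecord:
        # Mark the agent retired but keep its record for audit purposes.
        record = self._agents[name]
        record.status = Status.RETIRED
        record.retired_on = on
        return record
```

Keeping retired agents in the inventory, rather than deleting them, is a deliberate choice in this sketch: it preserves the history of what ran, under whose ownership, and when it was shut down.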

To navigate this complex landscape, organizations that succeeded in harnessing agentic AI without succumbing to chaos took decisive, proactive steps. Before any agent was deployed, business and technology leaders collaborated to define the specific metrics and performance thresholds that would automatically trigger a review for either retraining or full retirement. They instituted a culture of regular audits, treating their AI portfolio like any other critical business asset with scheduled quarterly reviews. For experimental pilot projects, formal “stage gates” were implemented, creating clear milestones to rigorously assess progress and granting organizational permission to “fail fast” and terminate initiatives that were not delivering value. Finally, they addressed the challenge of shadow AI not with an iron fist, but with a strategic understanding of its root causes, typically ignorance and bureaucracy, by making the sanctioned path for AI development and deployment more accessible, transparent, and user-friendly than the unsanctioned alternatives.
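As a rough illustration of thresholds agreed before deployment that automatically trigger a review, consider the sketch below. The metrics chosen (task accuracy and days since last use), the field names, and the example values are assumptions made for illustration; the article does not specify which metrics or thresholds these organizations used.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "no action"
    RETRAIN_REVIEW = "review for retraining"
    RETIRE_REVIEW = "review for retirement"


@dataclass(frozen=True)
class ReviewPolicy:
    """Thresholds agreed by business and technology owners before deployment.

    Both fields are hypothetical examples of such thresholds.
    """
    min_accuracy: float   # below this, performance has degraded
    max_idle_days: int    # above this, the agent looks abandoned


def review_action(accuracy: float, idle_days: int, policy: ReviewPolicy) -> Action:
    """Map an agent's current metrics to the review they trigger, if any."""
    # An agent nobody uses is a retirement candidate regardless of accuracy.
    if idle_days > policy.max_idle_days:
        return Action.RETIRE_REVIEW
    # An agent still in use whose quality has slipped is a retraining candidate.
    if accuracy < policy.min_accuracy:
        return Action.RETRAIN_REVIEW
    return Action.NONE
```

With a policy such as `ReviewPolicy(min_accuracy=0.90, max_idle_days=60)`, a check like this could run as part of a scheduled audit, so that forgotten pilots and quietly degraded agents surface before something goes visibly wrong.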