Far from the spotlight that shines on the creation of colossal AI models, the quiet, relentless work of inference is where artificial intelligence delivers on its promise. Model development captures the headlines, but the value is realized during inference, the operational phase in which a trained model applies what it has learned to new data. As artificial intelligence integrates into every facet of business, from customer service to critical infrastructure management, the servers that power this process are becoming a vital strategic asset. This analysis examines the explosive growth, core challenges, and future trajectory of AI inference servers, providing a roadmap for navigating this essential technology trend.

The Surge of AI Inference: Market Dynamics and Adoption

Unpacking the Explosive Market Growth

The financial scale of the shift toward operational AI is staggering. The AI inference server market is forecast to grow from an estimated $38 billion in 2026 to a projected $133.2 billion by 2034, which works out to a compound annual growth rate of roughly 17% and signals a fundamental realignment of IT infrastructure priorities. This is not merely an incremental increase in spending; it represents a strategic pivot in which budgets are being reallocated from experimental projects to production-grade systems designed for continuous, real-time AI delivery. This surge underscores a market-wide recognition that the ultimate return on investment in AI is realized not when a model is trained, but when it is successfully and efficiently deployed at scale.
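The growth rate implied by the two forecast figures can be checked with a few lines of arithmetic. The sketch below is only a worked calculation on the numbers quoted above; the helper name `cagr` is chosen for illustration, and the eight-year span assumes the forecast runs from 2026 to 2034 inclusive of both endpoints as stated.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text: $38B in 2026 growing to $133.2B by 2034.
market_cagr = cagr(38.0, 133.2, 2034 - 2026)
print(f"Implied CAGR: {market_cagr:.1%}")
```

Run as written, this gives a rate of about 17% per year, which is the annual pace the market would need to sustain to reach the 2034 projection.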

This exponential growth is fueled by an escalating demand for real-time AI applications that are becoming central to modern business operations. The digital economy runs on immediacy, and applications involving generative AI, intelligent automation, and data-driven decision-making tools all require low-latency, high-throughput processing to be effective. Whether it is an AI agent providing instant customer support or a financial system detecting fraud in milliseconds, the underlying requirement is an inference engine that can process new information and deliver a conclusion without delay. Consequently, organizations are moving beyond proof-of-concept models and are now architecting their core systems around this powerful new capability.

This transition is profoundly altering the landscape of computational workloads. Historically, the immense cost and complexity of AI were associated with model training. However, the balance is rapidly shifting. As more models move from development into operational deployment, inference now represents a significant and steadily growing portion of total AI computational costs. For a widely used service, the cumulative cost of running inference millions or billions of times can easily eclipse the initial, one-time cost of training the model. This economic reality is forcing enterprises to optimize their inference strategies to ensure both performance and financial sustainability.
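To make the economics concrete, the break-even point between a one-time training cost and recurring inference spend can be estimated in a short calculation. Every figure below is hypothetical and chosen only for illustration; none comes from the market data above, and real serving costs vary widely by model, hardware, and utilization.

```python
def breakeven_requests(training_cost_usd: float, cost_per_1k_requests_usd: float) -> float:
    """Requests needed before cumulative inference spend equals the training cost."""
    if cost_per_1k_requests_usd <= 0:
        raise ValueError("cost_per_1k_requests_usd must be positive")
    return training_cost_usd / cost_per_1k_requests_usd * 1000


# Hypothetical inputs, for illustration only.
TRAINING_COST_USD = 5_000_000        # one-time cost to train the model
COST_PER_1K_REQUESTS_USD = 2         # serving cost per thousand requests
REQUESTS_PER_DAY = 10_000_000        # traffic for a widely used service

requests_needed = breakeven_requests(TRAINING_COST_USD, COST_PER_1K_REQUESTS_USD)
days_to_breakeven = requests_needed / REQUESTS_PER_DAY

print(f"Break-even after {requests_needed:,.0f} requests")
print(f"At current traffic that takes {days_to_breakeven:.0f} days")
```

Under these assumed inputs, inference spend overtakes the training bill well within the first year of operation, and every day after that widens the gap.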

Real-World Applications Powering the Trend

Perhaps the most visible driver of the inference boom is the widespread adoption of generative AI and large language models (LLMs). The ubiquitous chatbots in customer service, the sophisticated tools that assist in content creation, and the intelligent co-pilots integrated into software development environments all rely entirely on efficient and scalable inference capabilities. Each user query or prompt triggers an inference process, where the LLM analyzes the input and generates a relevant, coherent response. The quality of the user experience—its speed, accuracy, and relevance—is a direct function of the performance of the underlying inference server, making it a cornerstone of the modern application stack.

Beyond consumer-facing applications, businesses are deploying AI inference to automate and optimize their most complex internal processes. In the realm of cybersecurity, inference engines continuously analyze network traffic to detect anomalies that may indicate a sophisticated threat, providing a level of vigilance that is impossible to achieve with human oversight alone. Similarly, in manufacturing, sensors on machinery feed data to inference models that perform predictive maintenance, identifying potential equipment failures before they occur and preventing costly downtime. In these scenarios, the ability to instantly analyze new data is not just an advantage; it is a critical operational necessity.

The impact of AI inference is also transforming entire industries with highly specialized use cases. In healthcare, for example, inference engines are becoming indispensable tools for medical professionals. They can analyze medical imaging in real time, highlighting potential areas of concern for a radiologist, or process streams of patient data to flag early indicators of disease. In the high-stakes world of finance, these systems power algorithmic trading platforms that execute transactions in microseconds and run sophisticated fraud detection systems that protect consumers and institutions from financial crime. In each case, AI inference is not an auxiliary tool but a core component of the operational workflow.

Expert Perspectives on Key Strategic Decisions

The Foundational Challenges: Cost, Complexity, and Security

Despite its immense potential, deploying AI inference at scale is fraught with significant challenges, starting with its high cost and resource intensity. Experts warn that delivering low-latency inference demands immense computational power, which translates directly into a need for specialized and expensive hardware, most notably high-end GPUs. Beyond the initial capital expenditure, running these systems incurs substantial operational costs related to power, cooling, and the maintenance of robust data pipelines. These costs can be unpredictable and difficult to manage, especially as user demand for AI services fluctuates, making cost optimization a paramount concern for any organization.

Adding to the financial burden is the sheer complexity of the required infrastructure and a corresponding shortage of skilled professionals. Successfully deploying and managing inference servers requires a sophisticated and multilayered stack of technologies. This includes not only the hardware itself but also advanced software for orchestration, networking, storage, and performance monitoring. Integrating these components into a resilient, high-performance system demands deep expertise in fields like hardware engineering, MLOps, and DevOps—a combination of skills that is currently in short supply. A misconfiguration anywhere in this complex chain can create performance bottlenecks, degrade the user experience, and undermine the entire AI initiative.

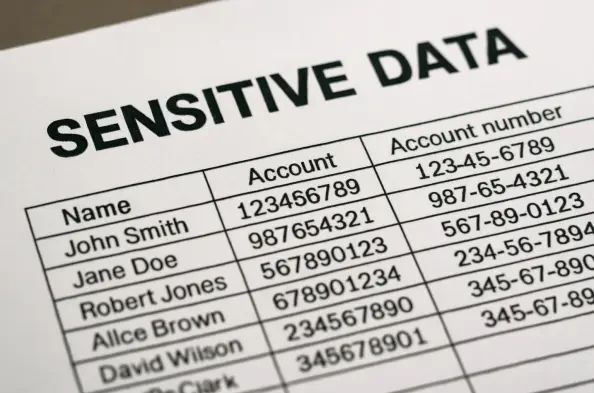

Furthermore, as inference processes become more distributed and handle increasingly sensitive data, they introduce elevated security and privacy risks. These systems often process proprietary business information or personal customer data, sometimes at the network edge where traditional security perimeters are less effective. This expanded attack surface creates new vulnerabilities that malicious actors can exploit. Industry leaders emphasize that security cannot be an afterthought; it requires a proactive, defense-in-depth strategy. This means integrating robust security controls at every level of the infrastructure—from the hardware up to the application layer—to mitigate risks, protect sensitive information, and ensure compliance with stringent data privacy regulations.

The Strategic Crossroads: Build, Buy, or Subscribe

Faced with these challenges, organizations find themselves at a strategic crossroads with three distinct paths for acquiring inference capabilities: building a custom solution, subscribing to a managed service, or buying a pre-built commercial server. The “build” or do-it-yourself approach involves assembling a bespoke solution using open-source tools. This pathway offers maximum control and flexibility, allowing an organization to tailor every component to its specific needs. A popular open-source library in this space is vLLM, prized for its highly efficient memory management techniques like PagedAttention and its advanced processing capabilities. However, this path is the most demanding, requiring significant in-house expertise, substantial financial resources, and a long-term commitment to ongoing maintenance, security patching, and operational management.

In contrast, the “subscribe” approach offers the convenience of Inference-as-a-Service (not to be confused with Infrastructure-as-a-Service, which shares the IaaS acronym), where a third-party cloud provider handles all the complexities of deployment, scaling, and management. This model enables rapid deployment and can be an attractive option for organizations looking to experiment without a large upfront investment. However, this convenience comes with considerable trade-offs. Handing over sensitive data to an external provider introduces significant data privacy and security risks. Moreover, organizations face the danger of vendor lock-in, unpredictable and often escalating costs, and potential performance bottlenecks due to network latency. For businesses in highly regulated industries, data sovereignty and compliance requirements may render this option entirely unviable.

A third option, “buy,” is often positioned as a balanced, enterprise-ready approach that combines the strengths of the other two models. Purchasing a commercial, pre-built inference server provides the control and data security of a self-hosted solution with the operational ease and support of a managed service. When evaluating this option, experts advise prioritizing platforms that are fundamentally open, secure, and performance-optimized. An ideal solution should be model-agnostic and hardware-agnostic, capable of running across any cloud or on-premises environment to avoid lock-in. It should also incorporate robust security features, advanced performance optimization techniques like those found in vLLM, and support for distributed inference with technologies such as llm-d so that it can scale efficiently to meet future demands.

The Future of AI Inference: Evolving Architectures and Implications

Technological Horizons and Architectural Shifts

The future of AI inference will be defined by a relentless pursuit of efficiency and optimization across both software and hardware. As models become larger and more complex, the cost of running them threatens to become unsustainable. To counteract this, innovations that maximize performance while minimizing resource consumption will become standard. Advanced memory management techniques like PagedAttention, which dramatically improve GPU memory utilization, and sophisticated distributed inference frameworks like llm-d, which allow a single model to run across multiple servers, will be essential for making large-scale AI economically feasible. The next wave of progress will emerge from the co-design of hardware and software, purpose-built to execute inference workloads with maximum efficiency.
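The idea behind paged memory management can be illustrated with a toy allocator. The sketch below is a simplified illustration of the paging concept only, not vLLM's actual implementation: the class `PagedKVCache` and its methods are hypothetical names, and it tracks block bookkeeping without storing any real tensors. The point it shows is that cache memory is handed out in small fixed-size blocks as tokens arrive, rather than reserved up front for each request's maximum possible length.

```python
from __future__ import annotations


class PagedKVCache:
    """Toy block allocator (hypothetical): maps each sequence's tokens onto
    fixed-size physical blocks that are allocated only when needed."""

    def __init__(self, num_blocks: int, block_size: int) -> None:
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))     # unused physical block ids
        self.block_tables: dict[str, list[int]] = {}   # sequence id -> its blocks
        self.seq_lens: dict[str, int] = {}             # sequence id -> token count

    def append_token(self, seq_id: str) -> tuple[int, int]:
        """Reserve a slot for one more token; return (physical_block, offset)."""
        length = self.seq_lens.get(seq_id, 0)
        offset = length % self.block_size
        if offset == 0:  # sequence is new, or its last block is full
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks")
            self.block_tables.setdefault(seq_id, []).append(self.free_blocks.pop())
        self.seq_lens[seq_id] = length + 1
        return self.block_tables[seq_id][-1], offset

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the shared pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


cache = PagedKVCache(num_blocks=4, block_size=16)
for _ in range(20):
    cache.append_token("request-a")   # 20 tokens need two 16-token blocks
for _ in range(5):
    cache.append_token("request-b")   # 5 tokens need one block
print(cache.block_tables, "free:", len(cache.free_blocks))
cache.free("request-a")               # blocks become reusable immediately
```

Because a short request holds only the blocks it has actually filled, more concurrent requests fit in the same amount of GPU memory, which is the utilization gain the paragraph above describes.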

Simultaneously, the market is trending decisively toward open and agnostic platforms. Early adopters who tied their AI strategy to a specific cloud provider or proprietary hardware are now recognizing the risks of vendor lock-in. To maintain strategic flexibility and future-proof their investments, organizations are increasingly demanding inference platforms that are model-agnostic, hardware-agnostic, and location-agnostic. The ability to run any model on any accelerator in any environment—be it a public cloud, a private data center, or a hybrid combination—is becoming a non-negotiable requirement. This shift empowers businesses to choose the best tools for the job without being constrained by the ecosystem of a single vendor.

Another significant architectural evolution is the push to move inference processing closer to where data is generated. This trend, known as inference at the edge, involves running AI models on local servers or directly on edge devices rather than sending data to a centralized cloud. This approach offers several compelling advantages. It dramatically reduces latency, which is critical for applications requiring instantaneous responses, such as autonomous vehicles or industrial robotics. It also enhances privacy and security by keeping sensitive data on-premises. As edge computing capabilities become more powerful, this decentralized model will unlock a new class of real-time, context-aware applications that are simply not possible with a cloud-centric architecture.
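The latency advantage of edge inference has a physical basis that a rough calculation makes visible. The sketch below estimates only the propagation delay over optical fiber, using the commonly cited approximation that light travels through fiber at about 200,000 km/s. The distances and the model execution time are hypothetical, and the sketch deliberately ignores routing, queuing, and the fact that edge hardware may run the model more slowly than a data-center GPU.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # approximation: about two-thirds of c in vacuum


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on network round-trip time from propagation delay alone."""
    return 2 * distance_km * 1000 / SPEED_IN_FIBER_KM_PER_S


def end_to_end_ms(distance_km: float, inference_ms: float) -> float:
    """Propagation round trip plus model execution time."""
    return min_round_trip_ms(distance_km) + inference_ms


# Hypothetical scenario, for illustration only.
INFERENCE_MS = 20.0          # assumed identical at both locations
CLOUD_DISTANCE_KM = 1500.0   # a distant cloud region
EDGE_DISTANCE_KM = 1.0       # an on-site edge server

cloud_ms = end_to_end_ms(CLOUD_DISTANCE_KM, INFERENCE_MS)
edge_ms = end_to_end_ms(EDGE_DISTANCE_KM, INFERENCE_MS)
print(f"cloud: {cloud_ms:.2f} ms, edge: {edge_ms:.2f} ms")
```

Even under these idealized assumptions, distance alone adds a floor of several milliseconds to every cloud request that no amount of server optimization can remove, which matters most for control loops in vehicles and robotics.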

Broader Implications for Business and Technology

Ultimately, a well-architected inference strategy will be the primary enabler of intelligent, real-time, and scalable decision-making across the enterprise. When executed correctly, it transforms AI from a promising but siloed technology into a core business capability that is deeply integrated into operational workflows. The ability to instantly analyze new data and generate actionable insights will create a significant competitive advantage, allowing organizations to respond more quickly to market changes, optimize processes with unprecedented precision, and create more personalized and responsive customer experiences. Inference is the bridge that carries AI from the lab to the front lines of business.

However, as AI becomes more powerful and autonomous, the primary challenge will shift toward ensuring trust and accountability. For AI systems to be widely adopted in high-stakes environments, they must be transparent, reliable, and fair. This requires building systems with robust governance frameworks, model explainability features that can articulate the reasoning behind a decision, and mechanisms for bias detection and mitigation. Creating transparent and comprehensive audit trails will also be essential for meeting evolving regulatory demands and maintaining the confidence of users and customers. The long-term success of AI will depend as much on building trust as it does on improving technical performance.

In the long run, the intense focus on inference will fundamentally reshape how software is developed and operated. Intelligent features and autonomous AI agents will cease to be novel additions and will instead become standard components of the modern application stack. This new paradigm will require a resilient, scalable, and secure inference backbone to power these intelligent capabilities across the entire software ecosystem. The infrastructure that supports inference will become as foundational to the next generation of applications as the database and the web server were to previous generations, heralding a new era of truly intelligent software.

Conclusion: From Analysis to Action

This analysis shows that the AI inference server market is undergoing explosive growth, a trend driven by a surge in real-world applications that deliver tangible business value. Navigating this trend, however, requires overcoming significant challenges related to high costs, infrastructure complexity, and critical security vulnerabilities. Success depends on making strategic decisions about how to procure these capabilities, weighing the trade-offs between building a custom solution, buying a pre-built server, and subscribing to a managed service.

Ultimately, an intentional and well-architected inference strategy is no longer a mere technical detail; it is a critical determinant of success for any enterprise AI initiative. The path forward for organizations is to move from analysis to action. The most effective approach is to begin experimenting with a chosen pathway, whether it is build, buy, or subscribe, then learn from the results and iterate rapidly. These decisions should be anchored in foundational principles of openness, security, and long-term scalability to ensure that today’s infrastructure can support tomorrow’s innovations.