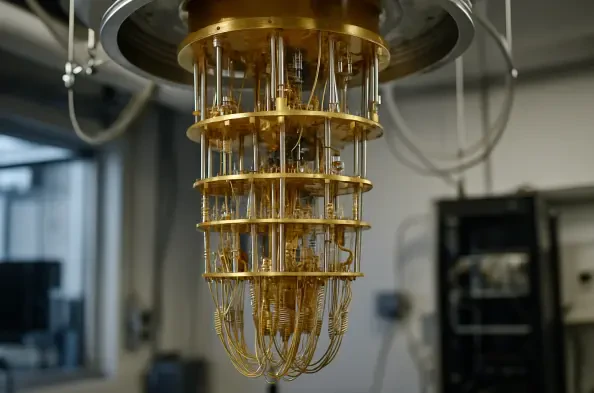

The year 2025 has firmly established itself as a critical inflection point for quantum technology, a period in which a convergence of hardware innovations has begun to close the gap between theoretical potential and tangible, real-world utility. Across the globe, corporate labs, academic institutions, and government-backed initiatives are accelerating their work and systematically dismantling the foundational barriers to scalable quantum computing. Their collective progress points to an overarching trend: the groundwork for practical, fault-tolerant systems is not only being laid but is solidifying at a rate few predicted. The primary forces behind this transformation are a fundamental shift in system architecture toward modular and networked designs, a concerted and increasingly successful push to reduce qubit error rates, and innovations that make quantum hardware more accessible for widespread use. These advancements, supported by a burgeoning global ecosystem and fueled by intense international competition, are positioning quantum computing to become an indispensable component of the world’s computational toolkit in the near future.

The Paradigm Shift in Scalability: Embracing Modular and Networked Architectures

One of the most profound strategic shifts in the quantum landscape is the deliberate pivot away from monolithic quantum supercomputers toward distributed, modular systems. This architectural approach mirrors the logic of classical cloud computing: multiple smaller, high-quality quantum processors are linked so that they function as a single, more powerful computational entity. By doing so, researchers can sidestep the immense engineering challenge of manufacturing a single, massive, flawless quantum chip, a task fraught with complexity and low yields. A landmark simulation study conducted by IonQ in collaboration with the University of California, Riverside, provided compelling evidence for this model’s viability. Their simulations showed that a network of interconnected smaller quantum computers can outperform a larger, standalone machine. Critically, they also indicated that the networked architecture is remarkably robust, maintaining high performance even when individual hardware components are imperfect, which significantly lowers the barrier to achieving scalable quantum advantage.
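The yield argument can be made concrete with a deliberately simple model, which is an assumption for illustration and not taken from the IonQ and UC Riverside study: each qubit is independently defective with probability `p`, and a chip is usable only if every one of its qubits works. The sketch compares the expected number of qubits that must be fabricated to obtain one flawless 1,000-qubit chip against ten tested 100-qubit modules. All function names and numbers are hypothetical.

```python
# Toy yield model (assumption): each qubit is independently defective with
# probability p_defect, and a chip is usable only if every qubit on it works.


def chip_yield(n_qubits, p_defect):
    """Probability that all n_qubits on one chip are defect-free."""
    return (1 - p_defect) ** n_qubits


def qubits_fabricated_monolithic(n_total, p_defect):
    """Expected qubits fabricated to obtain one flawless n_total-qubit chip.

    Attempts until the first good chip follow a geometric distribution,
    so the expected number of chips built is 1 / yield.
    """
    return n_total / chip_yield(n_total, p_defect)


def qubits_fabricated_modular(n_modules, qubits_per_module, p_defect):
    """Expected qubits fabricated when small modules are tested and bad ones discarded."""
    return n_modules * qubits_per_module / chip_yield(qubits_per_module, p_defect)


for p in (0.001, 0.005):
    mono = qubits_fabricated_monolithic(1000, p)
    mod = qubits_fabricated_modular(10, 100, p)
    print(f"p={p}: monolithic ~{mono:,.0f} qubits, 10 x 100-qubit modules ~{mod:,.0f} qubits")
```

With `p = 0.001`, the monolithic route needs roughly 2,700 fabricated qubits against about 1,100 for the modular one, and the gap widens rapidly as `p` grows. The model ignores the losses and extra errors introduced by the links between modules, which is precisely the cost a networked design has to recoup.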

This architectural revolution is being propelled by the parallel development of essential interconnect technologies designed to bridge the gap between separate quantum processors. Researchers at MIT, for instance, have engineered a pioneering device that enables direct, high-fidelity quantum communication between different chips, a cornerstone of the modular vision. This technology makes remote entanglement—the ability to inextricably link the quantum states of qubits located on physically separate processors—a practical reality. By overcoming the stringent physical limitations inherent in single-chip systems, such innovations are paving the way for the construction of expansive, distributed quantum networks. These networks can be scaled up more easily over time by simply adding new processor modules, much like adding servers to a data center. This fundamental change in thinking, from a single, centralized “quantum brain” to a distributed network of quantum nodes, is profoundly reshaping the industry’s roadmap for achieving large-scale quantum computation.
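Ideally, a successful remote-entanglement link leaves two modules sharing a Bell pair, (|00⟩ + |11⟩)/√2. In hardware, that pair would be created by exchanging photons through the interconnect. The sketch below instead builds the same two-qubit state with a Hadamard and a CNOT, a simplifying assumption, to show why the shared state is useful: each module’s measurement result is random on its own, yet the two results always agree. The function names are hypothetical.

```python
import math
import random

# Two-qubit state as amplitudes in the order |00>, |01>, |10>, |11>,
# where the left bit lives on module A and the right bit on module B.


def bell_pair():
    """Return the ideal shared state (|00> + |11>) / sqrt(2)."""
    state = [1.0, 0.0, 0.0, 0.0]  # start in |00>
    h = 1 / math.sqrt(2)
    # Hadamard on qubit A: |0x> -> (|0x> + |1x>)/sqrt(2), |1x> -> (|0x> - |1x>)/sqrt(2)
    state = [
        h * (state[0] + state[2]),
        h * (state[1] + state[3]),
        h * (state[0] - state[2]),
        h * (state[1] - state[3]),
    ]
    # CNOT with A as control and B as target: swaps the |10> and |11> amplitudes
    state[2], state[3] = state[3], state[2]
    return state


def measure(state, rng):
    """Sample one joint measurement; returns (bit on module A, bit on module B)."""
    probs = [abs(a) ** 2 for a in state]
    outcome = rng.choices(range(4), weights=probs)[0]
    return outcome >> 1, outcome & 1


rng = random.Random(42)  # seeded so the run is reproducible
shots = [measure(bell_pair(), rng) for _ in range(1000)]
print("A and B always agree:", all(a == b for a, b in shots))
print("fraction of 1s on A alone:", sum(a for a, _ in shots) / len(shots))
```

The fraction of 1s on module A alone hovers near one half, so neither module can predict its own result, while the perfect agreement between A and B is the correlation a distributed quantum computer exploits.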

Conquering a Core Challenge: Breakthroughs in Error Correction and Fidelity

In parallel with the architectural advances, significant progress has been made in tackling the most persistent obstacle in quantum computing: the fragility of quantum states and the computational errors that result from decoherence. A major highlight in this domain is a silicon-based atomic quantum processor that its creators claim is the most accurate built to date. As detailed in recent publications, this chip has achieved a record-breaking 99.99% fidelity in its operations. This near-perfect accuracy represents a monumental step toward reliable quantum systems that can perform complex calculations far beyond the reach of even the most powerful classical supercomputers. High fidelity is the bedrock of fault-tolerant quantum computing: the further a processor’s physical error rate falls below the error-correction threshold, the fewer physical qubits each error-corrected logical qubit requires. This dramatically reduces the overhead of error correction and brings practical applications closer to reality.
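The overhead argument can be illustrated with a back-of-the-envelope sketch based on a commonly quoted surface-code rule of thumb, p_L ≈ A · (p / p_th)^((d+1)/2), where `p` is the physical error rate, `p_th` the threshold, and `d` the code distance. The constants `A = 0.1` and `p_th = 0.01`, the 10⁻¹² logical-error target, and the treatment of 1 − fidelity as the per-operation error rate are all assumptions for illustration, not figures from the processor described above.

```python
import math

# Assumed constants for the heuristic  p_L ~= A * (p / P_TH) ** ((d + 1) / 2)
A = 0.1      # prefactor (assumption)
P_TH = 0.01  # threshold physical error rate, ~1% (assumption)


def min_code_distance(p, target_logical_error):
    """Smallest odd distance d with A * (p / P_TH) ** ((d + 1) / 2) <= target."""
    if not 0 < p < P_TH:
        # Above threshold, making the code larger does not help
        raise ValueError("physical error rate must be below threshold")
    # Solve (d + 1) / 2 >= log(target / A) / log(p / P_TH) for the smallest integer k = (d + 1) / 2
    x = math.log(target_logical_error / A) / math.log(p / P_TH)
    k = max(2, math.ceil(x - 1e-9))  # tolerance absorbs float round-off; d is at least 3
    return 2 * k - 1


def physical_qubits_per_logical(d):
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


for fidelity in (0.999, 0.9999):
    p = 1 - fidelity  # treat infidelity as the per-operation error rate (simplification)
    d = min_code_distance(p, 1e-12)
    print(f"fidelity {fidelity}: d = {d}, ~{physical_qubits_per_logical(d)} physical qubits per logical qubit")
```

Under these assumptions, moving from 99.9% to 99.99% fidelity lowers the required distance from 21 to 11, shrinking the cost from 881 to 241 physical qubits per logical qubit. Real overheads depend on the code, the noise model, and the decoder.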

The global pursuit of fault tolerance is a fiercely competitive endeavor, with multiple entities reporting substantial progress. IBM has made notable strides with its new generation of quantum processors, which feature dramatically improved qubit stability and longer coherence times. These hardware enhancements are a key component of the company’s ambitious roadmap, which targets quantum advantage by the end of 2026 and a fully fault-tolerant system by 2029. Underscoring the international nature of this race, China’s Zuchongzhi 3.2 quantum computer became the first system outside the United States to reach the fault-tolerance threshold, the point beyond which enlarging an error-correcting code suppresses logical errors instead of adding to them. This achievement relied on an innovative and highly efficient microwave-based control approach, demonstrating that multiple viable technological pathways exist for building robust, error-corrected quantum systems.

Furthermore, the synergy between hardware and software has emerged as a critical catalyst for progress. Google’s “Quantum Echoes” algorithm represents a landmark software breakthrough in this area, providing a verifiable method for demonstrating quantum advantage on today’s noisy intermediate-scale quantum (NISQ) devices. The algorithm runs a sequence of operations forward, perturbs a single qubit, and then runs the sequence in reverse; the returning “echo” reveals how the disturbance spread through the system, enabling new kinds of scientific analysis on near-term hardware. This software-driven approach, combined with hardware improvements like those in Google’s Willow chip, which showed that logical error rates fall as its error-correcting code is scaled up, illustrates how hardware and software advances reinforce each other to accelerate the arrival of a truly useful quantum age.
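The echo idea can be illustrated with a toy out-of-time-order correlator (OTOC), the kind of quantity Google has reported measuring with Quantum Echoes, on three simulated qubits. This is a simplified sketch under stated assumptions, not Google’s algorithm: the circuits, the X “butterfly” perturbation on qubit 0, and the Z probe on qubit 2 are all hypothetical choices. The correlator F = ⟨ψ| W(t)† V† W(t) V |ψ⟩, with W(t) = U† W U (evolve forward, perturb, evolve backward), stays at 1 when the perturbation never reaches the probed qubit and moves away from 1 when the circuit spreads it there.

```python
import numpy as np

# Single-qubit building blocks
I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
P0 = np.array([[1, 0], [0, 0]], dtype=complex)  # projector |0><0|
P1 = np.array([[0, 0], [0, 1]], dtype=complex)  # projector |1><1|

N = 3  # number of qubits; qubit 0 is the leftmost tensor factor


def on(gate, q):
    """Embed a single-qubit operator acting on qubit q into the N-qubit space."""
    out = np.eye(1, dtype=complex)
    for i in range(N):
        out = np.kron(out, gate if i == q else I2)
    return out


def cnot(control, target):
    """CNOT = |0><0|_control (x) I + |1><1|_control (x) X_target."""
    return on(P0, control) + on(P1, control) @ on(X, target)


def circuit(gates):
    """Compose gates, applied in the listed order, into a single unitary U."""
    u = np.eye(2 ** N, dtype=complex)
    for g in gates:
        u = g @ u
    return u


def otoc(u, butterfly, probe, psi):
    """F = <psi| W(t)^dag V^dag W(t) V |psi> with W(t) = U^dag W U."""
    w_t = u.conj().T @ butterfly @ u  # forward pass, perturbation, backward pass
    op = w_t.conj().T @ probe.conj().T @ w_t @ probe
    return np.vdot(psi, op @ psi)


psi0 = np.zeros(2 ** N, dtype=complex)
psi0[0] = 1.0  # |000>

W = on(X, 0)  # butterfly: flip qubit 0 between the forward and backward passes
V = on(Z, 2)  # probe: Z on qubit 2

# Only a local gate on qubit 0, so the perturbation never reaches qubit 2.
local = circuit([on(H, 0)])
# On the backward pass, the flip on qubit 0 is copied onto qubit 1 and then qubit 2.
chain = circuit([cnot(1, 2), cnot(0, 1)])

print(round(otoc(local, W, V, psi0).real, 6))  # 1.0: echo returns undisturbed
print(round(otoc(chain, W, V, psi0).real, 6))  # -1.0: perturbation spread to qubit 2
```

In the second circuit the perturbed operator grows from X on qubit 0 into X on all three qubits, which anticommutes with the probe and flips the sign of F. Large-scale experiments track this kind of operator spreading on many more qubits, where classical simulation becomes impractical.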

Enhancing Accessibility and Practicality: Room-Temperature Operations and Miniaturization

A truly game-changing trend is the development of quantum hardware that can operate without extreme cryogenic cooling. Many leading quantum systems, superconducting processors in particular, must be kept at temperatures colder than deep space to protect delicate quantum states, which requires large, expensive, and power-hungry refrigeration infrastructure. This has been a major logistical and economic barrier to widespread adoption. Scientists at Stanford University have now tackled this limitation by creating a tiny optical device that entangles photons with electrons at room temperature. The device uses “twisted light,” light whose wavefront spirals as it travels and which carries orbital angular momentum, within a two-dimensional material called molybdenum diselenide, and it could remove one of the most significant obstacles to practical quantum technology. By making quantum operations feasible in more conventional settings, this innovation could make the technology far more practical to integrate into a diverse array of environments beyond specialized labs.

In a similar vein, the drive toward miniaturization is paving the way for the mass production of quantum machines, moving them from bespoke laboratory prototypes to accessible, commercially manufactured devices. A key innovation is a newly developed microchip-sized device that controls laser frequencies with extraordinary precision while consuming significantly less power than traditional systems. Crucially, it is manufactured using standard fabrication techniques, a vital step for enabling scalable production. Further contributing to this trend is a prototype entanglement source chip developed through a collaboration between Cisco and UC Santa Barbara. This chip excels at efficiently generating the entangled photons that are the fundamental building blocks of quantum networks and secure communications. By streamlining this core process, the chip could potentially accelerate the timeline for practical quantum computing by nearly a decade. These advancements in operational efficiency and compact design are converging to make quantum technology more affordable and powerful.

The Thriving Ecosystem: Investment, Applications, and Future Trajectories

The rapid pace of hardware innovation has been propelled by an increasingly robust and expanding global ecosystem. A massive influx of private capital, combined with substantial government support, has cultivated a fiercely competitive environment, with a recent analysis identifying 76 major companies actively driving progress. This dynamic landscape has encouraged industry leaders like IBM and IonQ to place significant strategic bets on specific qubit architectures (superconducting circuits and trapped ions, respectively), accelerating development within those domains. The positive outlook is echoed by initiatives such as the DARPA Quantum Benchmarking Initiative, which conducted a thorough review of the field and concluded that despite the remaining obstacles, the eventual success of quantum computing is now considered “more likely than not.” This growing confidence has permeated the entire sector, attracting further talent and investment.

As the hardware matures, the timeline for tangible applications is moving from a distant horizon into a five-to-ten-year commercialization window. Fields such as healthcare and materials science stand poised for revolutionary change, as quantum simulations running on high-fidelity processors will allow molecular interactions to be modeled at an unprecedented scale. This capability promises to dramatically speed up drug discovery and enable the design of novel materials, including new alloys and more efficient batteries. Optimization problems in logistics, transportation, and finance also stand to be transformed by quantum algorithms designed to tackle hard combinatorial problems. While significant challenges remain in scaling systems and integrating quantum processors with classical infrastructure, the momentum is undeniable. Advances in linked systems and expanded cloud-based access have solidified quantum computing’s role as a transformative technology poised to reshape the computational landscape for decades to come.