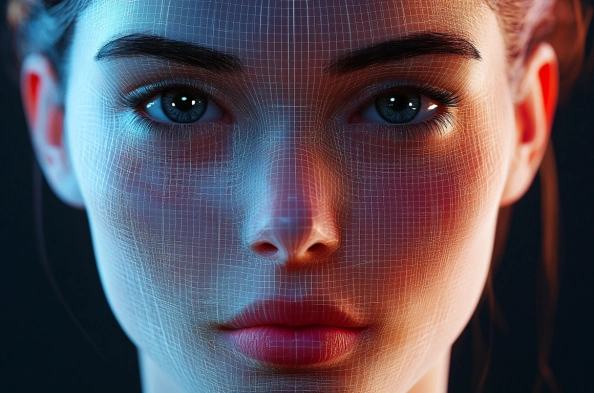

In today’s digital era, technology is advancing at an unprecedented pace, and users need to adapt quickly. Cybersecurity specialists consistently stress the importance of cautious browsing and robust antivirus software. Avast Free Antivirus, for example, is recommended for its effectiveness against malicious attacks. One concerning development is the rise of deepfakes: media generated or manipulated by artificial intelligence that use the likenesses of notable figures such as celebrities and politicians. These forgeries often imitate both how a public figure looks and how they sound, which makes them effective at misleading and deceiving audiences.

The Evolution of Deepfake Technology

From Face-Swapping to Voice Synthesis

Deepfakes initially focused on face-swapping, in which a celebrity’s face is superimposed onto another person’s body in a video. Voice synthesis has since made them more convincing by closely replicating a celebrity’s voice. Manipulating both the visual and the audio track makes these fabrications significantly harder to detect, and the realism achieved by combining the two has turned deepfakes into a potent tool for malicious purposes.

What began as amateur efforts in face-swapping has now evolved into sophisticated forgeries that are challenging to distinguish from genuine videos. As the technology advances, it becomes simpler for less technically skilled individuals to produce high-quality deepfakes using widely available software. This proliferation means that the volume of deepfakes in circulation is likely to rise, increasing the chances of these forgeries being used for harmful purposes. Protecting against this growing threat requires staying ahead of the technology and constantly developing new detection techniques.

The Role of GANs and Deep Learning

The emergence and refinement of deepfakes in recent years have largely been driven by advances in Generative Adversarial Networks (GANs) and deep learning. These technologies enable the creation of highly realistic, yet entirely fabricated, video and audio content. A GAN pits two neural networks against each other: the generator produces fake samples, and the discriminator tries to tell them apart from real ones. Because each network improves in response to the other, the generator’s output becomes more realistic over many training iterations. This process has been instrumental in refining deepfakes to the point where they are nearly indistinguishable from authentic media.
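For readers who want the formal version, the adversarial game described above is usually written as the minimax objective introduced by Goodfellow et al. in 2014. Here $D(x)$ is the discriminator’s estimated probability that $x$ is real, $G(z)$ turns random noise $z$ into a fake sample, and $p_g$ is the distribution of the generator’s outputs:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\!\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\!\left[\log\bigl(1 - D(G(z))\bigr)\right]

% For a fixed generator, the best possible discriminator is
D^{*}(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
```

When the generator’s distribution $p_g$ matches $p_{\text{data}}$, $D^{*}(x) = 1/2$ everywhere. At that point even the best discriminator can only guess, which is the formal sense in which GAN output becomes indistinguishable from real data.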

Together with GANs, deep learning algorithms have given creators the tools to produce seamless, convincing deepfakes. Because anyone with a computer and internet access can now generate fabricated media, malicious actors can more easily create deepfakes that manipulate public perception and spread disinformation at scale. The challenge, therefore, is to counter this misuse through both technological and legal measures.

The Potential for Misuse

Threats to Public Figures

While some consider deepfakes a form of entertainment, their potential for misuse and exploitation is substantial. Malicious actors can use the technology to tarnish a celebrity’s reputation, spread disinformation, commit extortion, or engage in cyberbullying. Such actions harm the individuals involved and also erode public trust in digital media. Public figures are especially vulnerable targets: their recognition and influence make them ideal subjects for convincing deepfakes that can reach and mislead large audiences.

The threat to public figures is not merely hypothetical; there have been several high-profile cases where deepfakes have been used to discredit politicians or spread false information. These incidents highlight the potential of deepfakes to cause real-world harm and disrupt the political landscape. Furthermore, the legal avenues for recourse are still developing, leaving victims with limited options for redress. The psychological toll on individuals subjected to deepfake attacks can be immense, as they may suffer from public shaming, invasion of privacy, and emotional distress. Ultimately, the misuse of deepfake technology against public figures undermines the integrity of public discourse and trust in media.

Impact on Public Trust

The widespread availability and use of deepfake technology can lead to a significant erosion of public trust. As deepfakes become more sophisticated, it becomes increasingly difficult for the average person to distinguish between real and fake content. This can lead to a general skepticism toward all digital media, undermining the credibility of legitimate news sources and public figures. When people lose faith in the authenticity of digital content, it paves the way for a post-truth era where misinformation can flourish unchecked.

This erosion of trust has far-reaching implications, affecting not only individual reputations but also the stability of social and political institutions. Deepfakes exploit people’s natural tendency to believe what they see and hear, which makes it harder to navigate the digital information landscape accurately. By blurring reality and fiction, they can sow doubt and confusion, destabilize the social fabric, and make it easier for malicious actors to push their agendas. Restoring and maintaining trust in digital media is therefore crucial to informed decision-making and to public confidence in legitimate news sources.

Detecting Deepfakes

Visual Indicators

Recognizing deepfakes involves scrutinizing several aspects of the video and audio content. A thorough examination of facial characteristics, such as inconsistencies in skin texture and aging, can help identify potential deepfakes. Observing the shadows and lighting on the face, as well as the reflectivity and glare angles on glasses, can also provide clues. For example, deepfakes may fail to accurately replicate how light interacts with facial features, resulting in unnatural shadow placements or inconsistent reflections.

Details such as blinking patterns, the smoothness of the skin, and the alignment of facial features can serve as indicators of manipulation. Deepfakes may exhibit unusual or mechanical blinking, which can be a giveaway that the content has been doctored. Similarly, imperfections in blending facial features can produce an uncanny effect that appears off to the viewer. By paying close attention to these visual subtleties, it is possible to identify anomalies that suggest the presence of deepfake technology. However, the increasing sophistication of these forgeries means relying solely on visual cues may not always suffice.
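As a rough illustration of how blinking can be measured automatically, the sketch below uses the eye aspect ratio (EAR), a measure from the blink-detection literature that drops sharply when the eyelid closes. It assumes six (x, y) landmarks per eye, ordered around the eye with p1 and p4 at the corners, already extracted by some face-landmark detector (not shown). The function names, the 0.2 threshold, and the two-frame minimum are illustrative assumptions, not values taken from any specific detection product.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six landmarks p1..p6; p1 and p4 are the eye corners."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # two eyelid-to-eyelid distances
    horizontal = math.dist(p1, p4)                    # corner-to-corner eye width
    return vertical / (2.0 * horizontal)


def count_blinks(ears: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`.

    Both defaults are illustrative assumptions, not calibrated values.
    """
    blinks = 0
    run = 0
    for ear in ears:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ends while the eye is still closed
        blinks += 1
    return blinks


def blinks_per_minute(ears: Sequence[float], fps: float, **kwargs) -> float:
    """Blink rate over the clip, given per-frame EAR values and the video frame rate."""
    minutes = len(ears) / fps / 60.0
    return count_blinks(ears, **kwargs) / minutes


# Usage with hand-made (hypothetical) landmarks and EAR values.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(round(eye_aspect_ratio(open_eye), 3))  # 0.667
ears = [0.3] * 10 + [0.1] * 3 + [0.3] * 10 + [0.1] + [0.3] * 5
print(count_blinks(ears))  # 1: the single-frame dip is ignored
```

A blink rate far outside what is plausible for a real person, or blinks with a suspiciously regular rhythm, would be a reason for a closer look rather than proof of manipulation.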

Audio and Lip Movements

Pay close attention to the audio quality and lip movements. Ensure that the lip-sync aligns seamlessly with the audio, as unnatural lip movements can be a telltale sign of deepfake technology. Additionally, the voice should sound natural without any unusual background noises indicative of spliced content. Subtle mismatches between voice modulation and corresponding lip movements often occur in deepfakes, revealing a lack of harmony between the two.
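One simple way to quantify lip-sync is to check whether the mouth opens wider when the audio is louder. The sketch below assumes a per-frame mouth-opening measurement (for example, the distance between upper and lower lip landmarks, extracted elsewhere) and raw mono audio samples. It computes the loudness (RMS energy) of the audio within each video frame and the Pearson correlation between the two series. The function names, and the idea of treating a low or negative score as suspicious, are illustrative assumptions; practical detectors generally learn this audio-visual relationship rather than relying on a single correlation.

```python
import math
from typing import List, Sequence


def rms_per_frame(samples: Sequence[float], sample_rate: int, fps: float) -> List[float]:
    """Root-mean-square loudness of the audio samples covering each video frame."""
    per_frame = int(sample_rate / fps)
    energies = []
    for start in range(0, len(samples) - per_frame + 1, per_frame):
        chunk = samples[start:start + per_frame]
        energies.append(math.sqrt(sum(s * s for s in chunk) / len(chunk)))
    return energies


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation of two series, truncated to the shorter length."""
    n = min(len(xs), len(ys))
    if n == 0:
        return 0.0
    xs, ys = list(xs[:n]), list(ys[:n])
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no sync information
    return cov / math.sqrt(var_x * var_y)


def lip_sync_score(mouth_opening: Sequence[float], samples: Sequence[float],
                   sample_rate: int, fps: float) -> float:
    """Near +1: mouth movement tracks loudness; near 0 or negative: possible mismatch."""
    return pearson(mouth_opening, rms_per_frame(samples, sample_rate, fps))


# Synthetic example: 10 audio samples per video frame, loudness varying per frame.
loudness = [0.1, 0.9, 0.2, 0.8, 0.1, 0.9]
audio = [a * (1 if i % 2 == 0 else -1) for a in loudness for i in range(10)]
in_sync = lip_sync_score(loudness, audio, sample_rate=100, fps=10)
out_of_sync = lip_sync_score(loudness[::-1], audio, sample_rate=100, fps=10)
```

In the synthetic example, the mouth track that follows the loudness scores close to +1, while the reversed track scores close to -1. Real footage will fall somewhere in between, so any cut-off would need to be calibrated on genuine videos.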

The nuances of speech patterns, such as tone, pitch, and emphasis, provide further clues; discrepancies can be indicative of audio manipulation. For instance, a deepfake might fail to capture the distinct speech cadences of a well-known figure, leading to an audio-visual mismatch. Moreover, synthetic voices generated through AI often lack the emotional depth and intonation of genuine human speech. By detecting these subtle irregularities, users can unmask deepfake content. Nonetheless, as technology advances, the authenticity of manipulated audio and lip-syncing will keep improving, posing ongoing challenges in detection.

The Role of Cybersecurity Firms

Developing Detection Tools

In response to the growing threat of deepfakes, major cybersecurity firms are actively developing tools to detect these manipulations. Avast, the company behind Avast Free Antivirus and a leader in the cybersecurity sector, emphasizes that most current cyber threats are rooted in human manipulation. The expertise and tools of such firms are crucial for identifying the subtle artifacts and inconsistencies that deepfakes often contain. By leveraging machine learning and AI, these firms aim to build algorithms that scan for signs of manipulation more effectively than manual inspection alone.

These detection methods involve analyzing metadata, comparing frame-by-frame consistency, and employing sophisticated pattern recognition techniques to spot irregularities. The development of robust detection software requires continuous research and updates as deepfake technology evolves. Cybersecurity firms recognize the importance of staying ahead of this technological curve to provide effective defense mechanisms. By advancing their detection capabilities, they play a significant role in mitigating the threats posed by deepfakes, thereby safeguarding the credibility of digital content.
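To make the frame-by-frame idea concrete, the sketch below measures how much each frame differs from the previous one and flags transitions that change far more than is typical for the clip. Such a spike is one possible symptom of a face that was not blended consistently across frames. Frames are assumed to be already decoded into grayscale pixel grids; the function names and the factor of 3 relative to the median are illustrative assumptions, and real tools combine many such signals, often with learned models.

```python
from statistics import median
from typing import List, Sequence

Frame = Sequence[Sequence[float]]  # rows of grayscale pixel values


def frame_difference(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two equally sized frames."""
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count if count else 0.0


def flag_inconsistent_frames(frames: Sequence[Frame], factor: float = 3.0) -> List[int]:
    """Indices i where the change from frame i-1 to frame i exceeds `factor` x the median change."""
    diffs = [frame_difference(frames[i - 1], frames[i]) for i in range(1, len(frames))]
    if not diffs:
        return []
    baseline = max(median(diffs), 1e-9)  # avoid a zero baseline for perfectly static clips
    return [i for i, d in enumerate(diffs, start=1) if d > factor * baseline]


# Usage with tiny 2x2 synthetic frames; frame 3 is abruptly brighter than its neighbours.
values = [10, 11, 12, 40, 14, 15]
frames = [[[v, v], [v, v]] for v in values]
print(flag_inconsistent_frames(frames))  # [3, 4]
```

Both the jump into frame 3 and the jump out of it are flagged. A legitimate scene cut would be flagged in the same way, so a result like this only marks frames worth inspecting more closely.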

Importance of Antivirus Software

Installing a reliable antivirus such as Avast Free Antivirus provides a critical line of defense against the many cyber threats prevalent today. Keeping antivirus protection up to date on all devices, in both personal and professional settings, further strengthens digital security, because regular updates let the software detect and counteract the latest threats, including new variants of malicious code and scams that exploit deepfake content. By remaining informed and cautious, users can navigate the digital landscape more safely and responsibly.

Having robust antivirus protection helps mitigate the risk of encountering deepfakes and other cyber threats by identifying potentially harmful content before it can cause damage. Furthermore, educating users about the signs of deepfakes and promoting digital literacy can empower individuals to recognize and respond to such threats more effectively. Cybersecurity is not solely about software but also about fostering awareness and preparedness among users. By combining technological tools with user education, the effectiveness of deepfake detection and prevention can be significantly enhanced.

Staying Vigilant

Trusting Your Instincts

Although these indicators can help identify deepfakes, they are not foolproof, so it is essential to trust your instincts. If a video seems off, or if its content appears unrealistic, inflammatory, or illogical, be skeptical of its authenticity. A healthy skepticism toward content circulated online is crucial in this age of advanced media manipulation. Engaging critically with media, cross-referencing sources, and questioning the plausibility of the information presented are all steps individuals can take to protect themselves from deception.

Using fact-checking websites and relying on reputable news sources can also aid in verifying the authenticity of questionable content. Trusting one’s judgment plays an essential role in identifying manipulative and potentially harmful media. Remember, if something appears too sensational or provocative, there’s a reasonable likelihood that it might be altered or entirely fabricated. By staying informed and cautious, users can avoid falling prey to deepfakes and other forms of digital deceit.

Proactive Measures

Deepfakes that combine face-swapping with voice synthesis are already hard to tell apart from real videos, and easily accessible software now lets people with minimal technical expertise produce them. As the number of deepfakes in circulation grows, so does the risk that they will be used maliciously. Staying ahead of this threat requires continuously developing new detection methods. Above all, protecting against deepfakes requires constant vigilance and innovation.